“AI tends to outperform not only humans, but human-AI teams. One study, a 2019 preprint meta-analysis conducted by researchers at the University of Washington and Microsoft Research, looked at a number of previous studies in which decisions made by AI systems alone were assessed against ‘AI-assisted’ decisions made by humans. In every case, they found that the AI operating on its own performed better than the AI-human team.”

From the book *Futureproof* by Kevin Roose

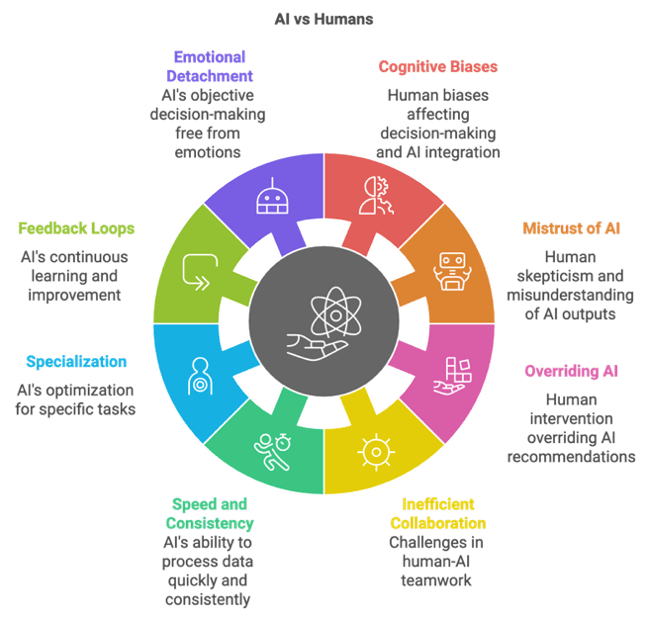

The phenomenon of artificial intelligence (AI) systems outperforming not only humans but also human-AI teams is both fascinating and significant. At first glance, it seems paradoxical that AI, a human creation, can do better on its own than when people work alongside it. Several factors help explain this:

- Cognitive Biases in Human Decision-Making: Humans are inherently susceptible to cognitive biases that can hinder effective decision-making, especially in contexts requiring rationality and objectivity:

- Overconfidence Bias: Humans often overestimate their knowledge or abilities, which can lead them to disregard or undervalue AI recommendations. For example, in financial trading, a trader may dismiss algorithmic advice due to an inflated trust in their own market predictions, leading to suboptimal outcomes.

- Confirmation Bias: People tend to favor information that aligns with their preexisting beliefs and discount contradictory evidence provided by AI. In medical diagnostics, a doctor might stick with an initial diagnosis even when the AI points to a different condition, which can affect patient outcomes.

- Anchoring Effect: Initial impressions can exert disproportionate influence on human decisions. In negotiations, for example, the opening price often anchors perceptions, making it harder to accept a more accurate AI recommendation that departs from it.

- Mistrust and Misinterpretation of AI Outputs: AI insights are sometimes undervalued because people mistrust them or find it hard to understand how the system produced them:

- Lack of Transparency: The “black-box” nature of some AI models makes their decision-making opaque and harder to trust.

- Complexity of AI Models: Advanced AI algorithms, such as those using deep learning, often involve intricate computations that users find challenging to interpret, leading to misinterpretation or skepticism.

- Probabilistic Reasoning Issues: Humans often struggle to interpret probabilities and tend to misweight risks. For instance, a forecast of a 30% chance of rain may be read as “unlikely” rather than as a meaningful risk.

- Overriding or Ignoring AI Recommendations: Despite their strengths, AI systems can be overruled by humans, often to the detriment of the outcome:

- Human Intervention: In collaborative settings, human operators sometimes override AI decisions based on intuition, as seen in aviation, where pilots may disable automated systems, occasionally leading to accidents.

- Emotional Factors: Emotions can sway decisions, leading to choices that are less rational than the AI’s data-driven recommendations. For instance, in hiring, personal biases might lead a recruiter to favor one candidate over another whom an AI system rates as objectively matching the job criteria better.

- Inefficient Human-AI Collaboration: The potential of AI is often hindered by collaboration challenges stemming from usability and human limitations:

- Interface and Usability Issues: Complex and unintuitive AI interfaces can lead to user errors or misinterpretation of data, reducing the overall efficiency.

- Cognitive Load: Managing AI inputs alongside other information can overwhelm users, leading to poorer decision-making. In high-stress environments like air traffic control, additional AI data might overload operators.

- Insufficient Training: Without adequate training in interpreting AI outputs, users may misuse valuable insights or ignore them altogether.

- Speed and Consistency of AI: AI systems excel in processing large amounts of data and delivering consistent results, qualities that give them a distinct advantage:

- Processing Large Data Volumes: AI can rapidly process vast datasets, identifying patterns or anomalies that humans might overlook. For example, in cybersecurity, AI can detect threats across millions of data points in real time.

- Consistency: Unlike humans, AI is not affected by fatigue, mood swings, or external distractions. Its decisions remain uniform under comparable circumstances.

- Specialization of AI Systems: AI systems are designed to excel at specific tasks, often achieving levels of precision and efficiency unattainable for humans:

- Purpose-Built Excellence: AI systems, such as those used in chess, focus entirely on winning strategies without being sidetracked by emotional or cognitive biases.

- Singular Focus: AI doesn’t get sidetracked by irrelevant factors, staying focused on the parameters it’s programmed to consider. In customer service, AI chatbots maintain unwavering focus on resolving queries, free from the emotional variables that affect human performance.

- Feedback Loops and Learning: One of the defining advantages of AI is its ability to adapt and improve continuously:

- Continuous Improvement: Many AI systems use machine learning to improve over time, adjusting to new data in ways humans might not. AI recommendation systems, like those used by streaming services, continually refine suggestions based on user behavior.

- Goal-Driven Optimization: AI is designed to optimize specific outcomes, whereas humans might juggle multiple, sometimes conflicting, objectives. In logistics, AI optimizes routes for efficiency, while humans might prioritize comfort or familiarity.

- Emotional Detachment: AI systems operate without emotional interference, enabling them to make impartial decisions:

- Impartiality: AI lacks emotions (so far…), allowing for objective decision-making free from fear, stress, or other emotional influences that can impair human judgment. In legal applications, AI can analyze evidence and precedents without emotional prejudice, although ethical oversight is still required to ensure fairness, since a model can reflect biases in its training data.

- Data-Driven vs. Experience-Driven Decisions: AI grounds its decisions in the data it is given, whereas humans often depend on intuition or outdated experience:

- Evidence-Based: AI relies strictly on data, while humans may rely on intuition or past experiences that do not apply to the current situation. In stock market analysis, AI can track current market trends more effectively than an analyst relying on outdated experience.

- Updating Beliefs: AI can adjust to new data more readily than humans, who might cling to outdated beliefs. This adaptability allows AI to remain effective in rapidly changing environments.

- Limitations of Human Perception: Humans face natural constraints in processing and retaining large volumes of information, whereas AI operates with far fewer such limitations:

- Simultaneous Multitasking: AI can process many streams of input at once. Autonomous vehicles exemplify this, combining inputs from multiple sensors to make rapid, accurate decisions.

- Unwavering Attention: Unlike humans, AI does not experience lapses in focus, ensuring consistent monitoring and response capabilities. In surveillance, AI can monitor feeds continuously without fatigue.
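The probabilistic-reasoning point in the list above (a 30% chance of rain read as “unlikely”) can be made concrete with a small expected-cost calculation. This is a minimal sketch, assuming two hypothetical costs on an arbitrary scale: carrying an umbrella costs 1, and getting wet without one costs 10. The constants and function names are invented for illustration.

```python
# Hypothetical costs on an arbitrary scale, chosen only for illustration.
COST_OF_CARRYING_UMBRELLA = 1.0  # small inconvenience, paid whether or not it rains
COST_OF_GETTING_WET = 10.0       # larger cost, paid only if it rains and you have no umbrella


def expected_cost(p_rain: float, take_umbrella: bool) -> float:
    """Expected cost of a choice, given the forecast probability of rain."""
    if take_umbrella:
        return COST_OF_CARRYING_UMBRELLA
    return p_rain * COST_OF_GETTING_WET


def should_take_umbrella(p_rain: float) -> bool:
    """Choose whichever option has the lower expected cost."""
    return expected_cost(p_rain, True) < expected_cost(p_rain, False)


def break_even_probability() -> float:
    """Probability of rain above which the umbrella is the better bet."""
    return COST_OF_CARRYING_UMBRELLA / COST_OF_GETTING_WET


if __name__ == "__main__":
    p = 0.30
    print(f"Expected cost without umbrella: {expected_cost(p, False):.1f}")  # 3.0
    print(f"Expected cost with umbrella:    {expected_cost(p, True):.1f}")   # 1.0
    print(f"Break-even probability:         {break_even_probability():.2f}")  # 0.10
    print("Take the umbrella?", should_take_umbrella(p))                      # True
```

Under these assumed costs, any forecast above 10% favors the umbrella, so a 30% forecast is a risk worth acting on. Different costs would move the threshold, but the underlying idea holds: a probability only becomes a decision once it is weighed against its consequences.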

But…

While AI demonstrates clear advantages, human qualities such as intuition, creativity, and ethical reasoning are key (so far…). Collaborative frameworks that leverage the strengths of both humans and AI are often the most effective:

- Human-AI Synergy: For example, in medical diagnostics, AI systems excel at pattern recognition, but a doctor’s experience and empathy remain essential for holistic patient care.

- Ethical Oversight: Ensuring that AI-driven systems align with ethical standards requires consistent human involvement, particularly to identify and mitigate biases within AI training data.

Despite its strengths, AI is not without its flaws, which must be addressed to maximize its potential:

- Bias in AI Systems: AI can inherit and perpetuate biases present in its training data. Recognizing and correcting these biases is essential to ensure fair and accurate outcomes.

- Contextual Understanding Deficiencies: AI struggles with interpreting nuances or context that are intuitive for humans. For example, in natural language processing, sarcasm or idiomatic expressions may be misunderstood, leading to errors.

Effective collaboration between humans and AI involves both technical and organizational improvements:

- Explainable AI (XAI): Developing transparent AI systems helps users understand how decisions are made, fostering trust and more informed decision-making.

- User Training and Interface Design: Investing in user education and creating intuitive AI interfaces can reduce cognitive load and enhance usability.
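Explainable AI spans many techniques, from post-hoc attribution methods such as SHAP to models that are interpretable by design. The sketch below shows only the simplest case, assuming a linear scoring model: because the score is a plain sum, each feature's contribution can be reported directly, so a user can see why the model rated someone as it did. The hiring context, feature names, weights, and bias are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative candidate-screening score.
# They are invented for this sketch and do not come from any real system.
WEIGHTS: Dict[str, float] = {
    "years_experience": 0.2,
    "required_skills_matched": 0.5,
    "missing_certifications": -0.4,
}
BIAS = -2.0


def score(candidate: Dict[str, float]) -> float:
    """Linear score: the bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * candidate[name] for name in WEIGHTS)


def explain(candidate: Dict[str, float]) -> List[Tuple[str, float]]:
    """Each feature's additive contribution to the score, largest effect first."""
    contributions = [(name, WEIGHTS[name] * candidate[name]) for name in WEIGHTS]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    candidate = {
        "years_experience": 5,
        "required_skills_matched": 4,
        "missing_certifications": 1,
    }
    print(f"score = {score(candidate):.2f}")
    for name, contribution in explain(candidate):
        print(f"  {name:<25} {contribution:+.2f}")
```

For the sample candidate, the explanation lists skills matched (+2.00) first, then experience (+1.00), then the missing certification (-0.40), and these contributions plus the bias add up exactly to the score. Deep models do not decompose this neatly, which is why post-hoc explanation methods exist; this sketch does not attempt to explain a black-box model.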

Because AI lacks emotional intelligence, human emotional capabilities remain critical in certain scenarios:

- Emotional Intelligence: In areas like leadership, counseling, and negotiation, where empathy and understanding are key, human emotional intelligence is crucial.

- Ethical Judgments: Moral dilemmas require human values and reasoning that AI currently cannot replicate.

Conclusion

The precision, speed, and consistency of AI make it a powerful tool in decision-making. However, human creativity, ethical judgment, and emotional intelligence remain essential. By understanding and leveraging the respective strengths of AI and humans, we can create collaborative frameworks that maximize benefits across various sectors.

What Can You Do? Reflect on how to foster effective human-AI collaboration in your professional field. This could involve adopting new technologies, advocating for ethical AI practices, or investing in education and training to build a future where human and AI capabilities complement each other effectively.

PS: Article researched and written with the assistance of AI

Discover more from Irrational Investors
