WHAT CO-INTELLIGENCE, BY ETHAN MOLLICK, TEACHES US

If, at this point, you’re still using generative artificial intelligence without established criteria, you’re not just late to the party; you’re acting recklessly. That is, more or less implicitly, the first warning of Co‑Intelligence, a book that does not aim to dazzle you with AI and what it can do, but rather to teach you how to handle it, govern it, and ultimately live with it across different areas of your life and work. Because (spoiler) if you don’t design the relationship, it will design it for you.

«Assume that the AI you’re using today is the worst AI you’ll ever use from now on»

Mollick wastes no time on theoretical speculation. His proposal is direct: using generative models calls for fewer debates about the singularity and more professional tactics. It’s not about understanding the transformer architecture but about knowing what to ask, how to evaluate what you receive, and when to say, “This isn’t useful.”

The thesis is simple but uncomfortable: AI is no longer a novelty; it’s a condition of the environment, the playing field itself. Every prompt you issue, every text you accept without cross‑checking, every decision you delegate without supervision is an act of algorithmic governance. And if that governance isn’t yours, it belongs to someone else.

The book is not a showcase of what ChatGPT, Claude, Copilot, Gemini, or any other LLM (large language model) can do. It is a guide to what you can do when interacting with machines that write quickly, confidently, and with a plausibility that is, to say the least, unsettling. The risk is no longer that AI “fails,” but that you can’t tell when it fails.

The author introduces four rules that are not intended to be universal laws but heuristic principles for navigating the human‑AI relationship through real‑world experience and critical iteration. Instead of proposing a closed normative framework, he offers a kind of “survival manual” for knowledge professionals and for everyone:

- Always Invite the Alien. Co‑intelligence is neither automatic nor passive. You must summon AI as an active interlocutor.

- Be the Human in the Loop. Human presence is not decorative; it is indispensable. Generative models make mistakes, “hallucinate,” and lack deep contextual understanding. Therefore, the human must always be the verifier, the editor, the critic.

- Treat AI Like a Person (But Don’t Forget It’s Not One). Many users interact with models as if they were people: they greet them, thank them, apologize to them. Far from discouraging this behavior, Mollick champions it as a way to establish a productive mental framework. By treating AI as a person, the user structures prompts better, makes context explicit, and demands more clarity.

- Assume It’s Capable of Anything (Because It Is). The model does not have a finite list of functions. Its behavior is emergent and can therefore surprise us. Mollick suggests that co‑intelligence only flourishes when we stop thinking in terms of “predefined uses” and start testing, combining, iterating.

Mollick then structures his proposal around five functional roles AI can assume in daily life: person, creative, colleague, tutor, and coach. And this is not a purely decorative analysis; it is, above all, a strategic approach:

- As a person: it isn’t one, but treating it as if it were somehow activates co‑intelligence. The chapter offers strategies for designing personalized prompts, adjusting the tone of the interaction, and detecting when the simulated persona drifts. It also introduces the idea of “counter‑personas,” which can be very useful at times.

- As a creative, it helps you face the “blank page,” but it won’t do everything for you, nor is everything it produces valid. As the book says, AI is not creative in itself, but it can be the spark that ignites human creativity.

- As a colleague, it invites you to debate what it generates rather than simply accept it; it invites you to collaborate. The future of work is neither human nor machine but human‑with‑machine. And that “with” is the key to everything: it is where the quality, equity, and sustainability of the new organizational model are at stake. In this context, co‑intelligence becomes not only desirable but inevitable.

- As a personalized tutor, it guides you and offers tailored information, but only if you know how to ask for it. AI as a tutor is not only an encyclopedia; it is also an educational interlocutor. The model’s flexibility allows tone, level, pace, and pedagogical focus to be adapted. For all these reasons, the author asserts, if AI can explain, assess, and accompany, then access to a tutor should not be a privilege, but a right.

- As a coach, it can help you think better, but it should never think for you. Mollick explores AI’s role as a coach, not to teach content but to catalyze reflection, continuous improvement, and decision‑making. The model acts as a mirror, a sounding board, an agent that asks questions and accompanies you; and, like any mirror, it can also amplify biases.

One sentence sums up his approach (and we are witnessing it practically every week): “Assume that the AI you use today is the worst you will ever use from now on.” If you don’t learn now, you already start at a disadvantage, because what is coming in the immediate future will be more powerful, faster, and—if you lack judgment—also darker.

The underlying thesis is not technological but contextual: using AI is no longer a technical skill; it is a cross-cutting professional competence and an obligation, on a par with reading well, structuring a decision, presenting and speaking in public, and exercising good judgment and critical thinking.

«If you’re not using AI, someone else is. And that someone is learning faster than you.»

According to Mollick, AI is not replacing human thought; it is reconfiguring its starting point. And if you don’t update that starting point, it doesn’t matter what you know—what matters is what you do with a machine that responds with confidence even when it is wrong.

In education, this means moving from prohibition to teaching people how to think with AI. In consulting, it means delivering more value by iterating with models. In politics, it requires acknowledging that AI is already shaping the debate and that intervention is necessary. All of this, and more, demands urgent action, because AI brings not only opportunities but also risks, and serious ones.

Co‑Intelligence is not a manifesto, a statement of intent, or a mere chronicle of events. It is a toolbox. It does not ask you to believe; it asks you to practice and get up to speed. Its proposal is pragmatic, not dogmatic: AI does not decide for you, but it conditions how you decide. And that “how” can no longer be left to intuition.

The book can be read quickly, but you will be applying it for months. It is useful for teams that need protocols, for trainers who don’t want to infantilize their learners, and for executives and leaders who don’t want to be left behind. And for anyone who takes their relationship with knowledge seriously. That is why it is not optional reading. If you lead, teach, decide, or design, you need a strategy for interacting with generative AI. This book is an excellent first step.

And the final questions? Easy:

- What are those already making decisions around you doing with AI? Because if they are learning and you are not, you are already at a disadvantage.

- And what if the problem isn’t AI but your lack of criteria for using it?

PS: This article has been improved with AI assistance.

BONUS

- PODCAST (made with NotebookLM)

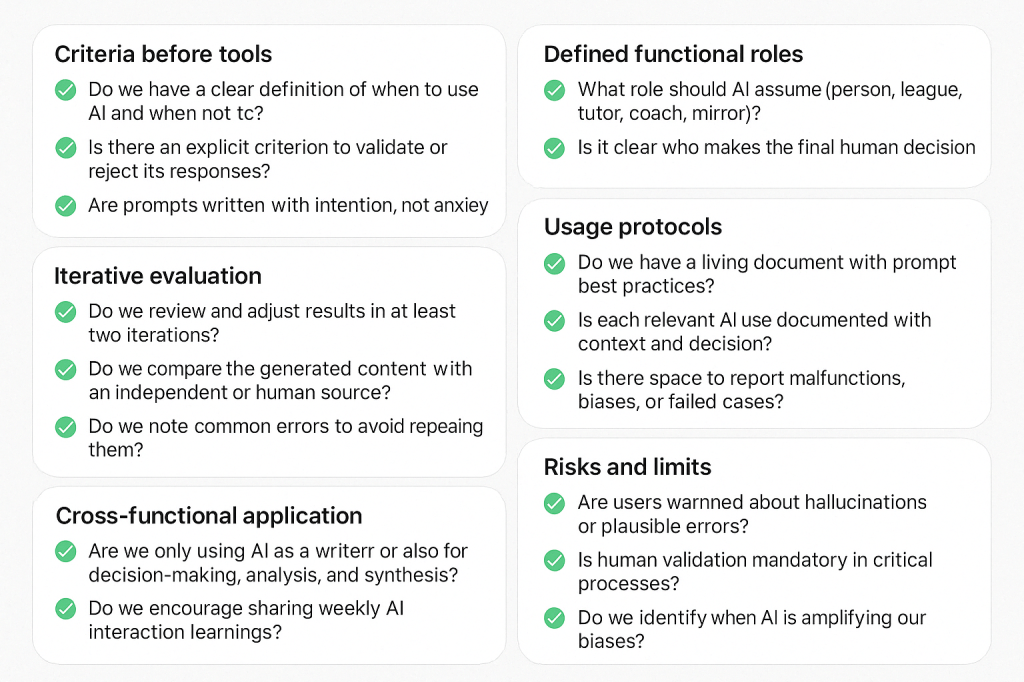

- OPERATIONAL CHECKLIST (for teams integrating generative AI into their daily work)

Discover more from Irrational Investors
